
Story 1: Who plans better? You or an LLM?

I was watching this interview on Machine Learning Street Talk where Prof. Subbarao Kambhampati argued that LLMs don’t have reasoning capability and therefore can’t generate a stylistically correct, reasonable, and executable plan. He shared an example of planning a trip to Vienna from India (transcript slightly rearranged for coherence):

… you could have a correct plan that doesn’t have the right style. So I give this example of a correct travel plan to come to Vienna from India. Where I started is walk one mile, run for another mile, then bike for another mile, etc., etc. By the end of which indefinite number of these actions I will be in Vienna. That is sort of correct, but it is highly bad style.

Most people would not consider that as a reasonable travel plan because they tend to stick to like an airline flying or some other kind of standardized versions.

… (but) in the LLM modulo architecture (a framework he is proposing), we basically say, “please critique both the style and correctness”?. So the style is something that LLMs are actually better at critiquing. (But) the correctness is something that they cannot.

And in this other article in ACM, he gave an example of wedding plans:

Many of the papers claiming planning abilities of LLMs, on closer examination, wind up confusing general planning knowledge extracted from the LLMs for executable plans. When all we are looking for are abstract plans, such as “wedding plans,” with no intention of actually executing the said plans, it is easy to confuse them for complete executable plans. Indeed, our close examination of several works claiming planning capabilities for LLMs suggests that they either work in domains/tasks where subgoal interactions can be safely ignored, or delegate the interaction resolution (reasoning) to the humans in the loop (who, through repeated prompting, have to “correct” the plan).

When I digested these ideas, it seemed reasonable to conclude that yes, humans can generate more reliable plans than LLMs.

But then the next day, my best friend was telling me about her sister’s trip planning skills. They were planning to meet their parents in Kuala Lumpur, Malaysia. Her sister would be travelling from Jakarta, while her parents would be travelling from another city called Batam. They agreed on a date, and her sister suggested that they'd simply meet up there on that date and that her parents could figure out the rest: taking the ferry, some ride-hailing services, or any public transport. As you might know, Kuala Lumpur is on the Malay Peninsula, separated by sea from both Jakarta (on Java) and Batam (an island off Sumatra). And Jakarta, being the most developed city in Indonesia, has better accessibility than Batam.

Upon learning this, my friend, who is more operationally minded, objected to the hand-wavy plan. She was annoyed at how her sister overestimated the feasibility of the plan without thinking through the details and the different constraints they have, e.g. the parents’ language skills, mobility, and the technical savviness needed to navigate the different hops required to actually get to the destination.

The point is obvious: not everyone can create proper executable plans. I’d even guess that there are more people without planning aptitude and logistical savviness than with. Sure, LLMs can't reliably generate executable plans without domain-specific training and tuning, but most humans can't either without experience, practice, and expert guidance. After all, that's what travel agents and travel guides are for.

Story 2: What do you mean by…? The lossiness of words as approximate symbols

Earlier today, I was quizzing my partner about whether he had reached some technical decisions for his automation framework through intuition or reasoning. He insisted that he didn’t intuit them, even though he wasn’t verbalising the decisions in his head while “thinking” about them, which sounds like intuition to me. We ended the discussion realising that we define the word differently. For him, intuition means something he “knows” is true but cannot articulate or explain his line of reasoning for. For me, intuition includes things you can and cannot ultimately verbalise with logic, because we sometimes start from intuition and work our way backwards, using logic and reason to verify it with ourselves and others.

As Prof. Ashley Jackson is known to say, we might be laboring under the misapprehension... of using the same words with different meanings.

This reminds me of how, just one month ago, I was grouching over how LLMs are unable to “understand” that two concepts from two lists, labeled with the exact same words, are the same, and are therefore unable to merge them into one clean consolidated list.

Well, apparently words are indeed such lossy and unreliable approximations. It’s not LLMs’ fault that they don’t “get” things. We rarely do ourselves. That said, I think it is technically fairer to hold this failure against LLMs, because they are built to work with languages (and ultimately, any symbols) and should be able to process things much more literally and comprehensively.

Story 3: Riddle me riddle you

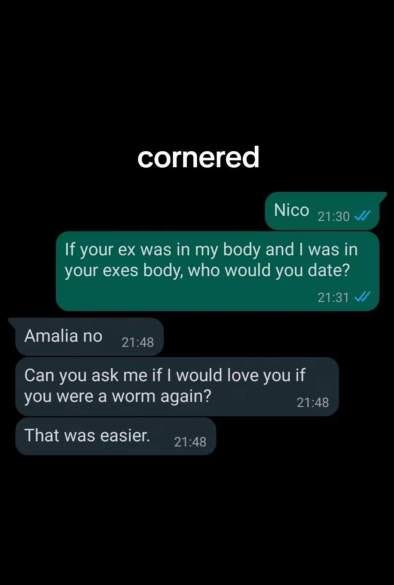

Yesterday I received this meme in one of my group chats. I immediately reacted with a laughing emoji and responded “there are two good answers for this, you know?” [3]. Only after I hit send did I realise I was actually thinking about another ex-joke. This one specifically: “If I was dying and the only way to save me was to kiss your ex, would you do it?”

I auto-completed the joke with the wrong punch line. I didn’t reason, I merely retrieved. Here I was deep in bot-mode.

Meanwhile, only 2 months earlier, LLM-skeptics were all over LLMs’ failure to reason through the man-and-a-goat-crossing-a-river riddle. Another riddle also came up: the boy and the surgeon. See footnotes [1] and [2] for links to some fun tweets from the X-drama, both from those who bashed and from those who countered the bashing.

I’ve argued before that we humans often operate in bot-mode and that’s okay. But it’s so tempting to nitpick and jump at these “inferiorities” of LLMs. Unlike the “flaw” in story #2, which is fair to hold against them, this one isn’t: they are ultimately not built for reasoning, at least not with the current techniques.

And I’d say that, rather than any actual flaws of LLMs themselves, it says more about the faulty thinking of the naysayers who mock how “not AGI” LLMs are. I’d say exactly the same to those who project any AGI potential onto these eloquent word-mashers, brilliant as they are at supplying potent substances for our own verbal alchemy. Like, don’t be silly y’all.

Sure, perhaps now you might be thinking, “but but but, a human will ultimately be able to reason through the riddle once they realise what they’ve done, while LLMs cannot”. But can’t they, really? And speaking personally, I’m not naturally good with riddles, so I am certain that even I, in my god-mode, would not be able to compete against most LLMs’ responses when measured by time, even if they ended up merely retrieving their “line of reasoning” from some riddle-explainer corpus in their training dataset.

Closing thoughts

Over the past week or so, the media seems to be buzzing about how we're now officially entering the trough of disillusionment with Generative AI. It's easy to be blinded by cynicism or hype. But at the end of the day, bubbles will need to burst, and doomers and fanbois will need to meet reality where it's at, before we can finally jiggle our way into the sweet spot for this technology and unlock its true power beyond its immediate promise of Capitalistic "productivity".

So, what are LLMs good for? I’m still walking around filtering my experiences through this question. And I’ll be reporting back here. So, stay tuned.

But let me hedge now, not romanticise or overintellectualise this quest, and admit that it’s really not that complicated. LLMs, in their current incarnation, are at their core NLP engines on steroids. But I’d like to take on the challenge of following that seemingly obvious fact through to something more concrete we can all act on and profit from.

Originally published to Proses.ID.

Footnotes

[1] Gary Marcus - A man and a goat crossing the river

Bashing

Countering

[2] Riley Goodside - The boy and the surgeon

[3] For those who are curious, the two good answers to this are (the latter video is in Indonesian but hey)
